ChatGPT launched in November 2022 and changed the world as we knew it. Since then, Large Language Models (LLMs) have become part of our daily workflows, enhancing our productivity and the quality of our work.

Another interesting milestone came in February 2023, when Meta released the Llama LLM under a noncommercial license.

This sparked enthusiasm among the many developers dedicated to advancing LLMs, leading to an increase in collaborative efforts and innovation within the field. A good example is the Hugging Face Model Hub, where new models are constantly published.

Developers started creating improved models and optimizing performance for local execution of LLMs on consumer-grade hardware.

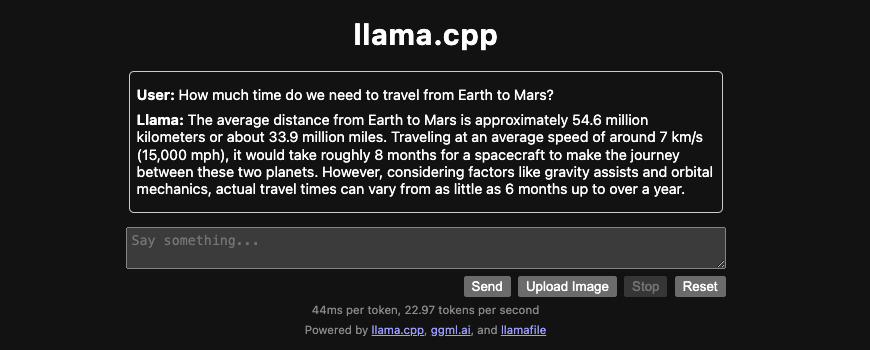

Llama.cpp is a port of Llama to C++, started in March 2023 with a strong emphasis on performance and portability. It includes a web server and an API:

https://github.com/ggerganov/llama.cpp
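
To give an idea of what talking to that API looks like, here is a minimal sketch in Python using only the standard library. It assumes a llama.cpp server is already running locally, that it listens on `http://127.0.0.1:8080`, and that it exposes a `/completion` endpoint accepting `prompt` and `n_predict` and returning the text in a `content` field. Those details come from the server's README as I understand it and may differ between versions, so check them against your build. The helper names `build_completion_request` and `complete` are made up for this example.

```python
import json
import urllib.request

# Assumption: a llama.cpp server is already running locally on this address.
SERVER_URL = "http://127.0.0.1:8080"


def build_completion_request(prompt, n_predict=64, base_url=SERVER_URL):
    """Build (without sending) a POST request for the /completion endpoint."""
    # Assumed request fields: the prompt text and the max number of tokens.
    payload = {"prompt": prompt, "n_predict": n_predict}
    return urllib.request.Request(
        url=f"{base_url}/completion",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt, n_predict=64, base_url=SERVER_URL, timeout=120):
    """Send the prompt to the local server and return the generated text."""
    request = build_completion_request(prompt, n_predict, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # Assumed response shape: the generated text lives under "content".
    return body["content"]
```

With a server running, something like `print(complete("Explain in one sentence what an LLM is."))` should print the model's answer. Building the request is kept separate from sending it so the payload can be inspected without a model loaded.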

Mistral 7B was released in October 2023, achieving better performance than larger Llama models and demonstrating the effectiveness of LLMs in compressing knowledge:

https://huggingface.co/papers/2310.06825

And now it’s easier than ever to run LLMs locally, especially since November 2023, when the llamafile project started packing llama.cpp and a full LLM into a single multi-OS executable file:

https://github.com/Mozilla-Ocho/llamafile

It’s even possible to run LLMs on a Raspberry Pi 4, like the TinyLlama-1.1B model used from a llamafile in this project:

https://github.com/nickbild/local_llm_assistant

As for using LLMs for code generation (GitHub’s Copilot has been available since 2021), there are IntelliJ plugins like CodeGPT (first released in February 2023) that now allow you to run code generation against a local LLM (running under llama.cpp):

https://github.com/carlrobertoh/CodeGPT

Google was a bit late to the party. In December 2023 they announced Gemini, and in February 2024 they launched the Gemma open models, based on the same technology as Gemini:

https://blog.google/technology/developers/gemma-open-models

They also released a gemma.cpp inference engine:

https://github.com/google/gemma.cpp

Finally, if you are lost among so many LLMs, an interesting resource is the Chatbot Arena, released in August 2023. It lets humans compare the results from different LLMs and keeps a leaderboard with chess-like Elo ratings.

According to this leaderboard, GPT-4 is still the king at the moment.
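
For readers unfamiliar with Elo ratings, here is a small sketch of the classic chess-style update rule, applied to a head-to-head comparison between two models. It is an illustration of the general idea only: the scale of 400 and the K-factor of 32 are conventional chess defaults, and nothing here is claimed to match how the Chatbot Arena actually computes its leaderboard. The function names are made up for this example.

```python
def expected_score(rating_a, rating_b):
    """Probability-like expected score of A against B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a, rating_b, score_a, k=32):
    """Return the new (rating_a, rating_b) after one comparison.

    score_a is 1.0 if A's answer was preferred, 0.0 if B's was, 0.5 for a tie.
    k (assumed default: 32) controls how far one result moves the ratings.
    """
    expected_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - expected_a)
    # Whatever A gains, B loses, so the sum of both ratings is preserved.
    return rating_a + delta, rating_b - delta
```

For instance, if two models both start at 1000 and a human prefers the first one, `update_elo(1000, 1000, 1.0)` moves them to 1016 and 984. An upset win against a much higher-rated model moves the ratings more than an expected win does, which is what lets a leaderboard converge from many pairwise votes.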